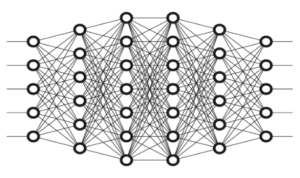

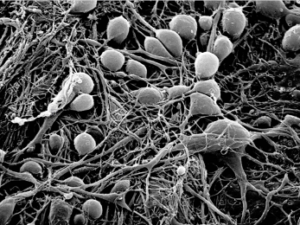

Above figure: A section of adult rat hippocampus stained with antibody, courtesy of EnCor Biotechnology Inc.

The Divide

In May 2019, after the International Conference on Learning Representations (ICLR), ML engineer Chip Huyen posted a summary of the trends she’d witnessed at the conference. The 6th point on her list was “The lack of biologically inspired deep learning”. Indeed, across 500 talks, only 2 were about biologically inspired Artificial Neural Network (ANN) architectures. This was nothing new. For years, the conversation between Neuroscience and AI has been disappointingly unfruitful.

Why is this a shame?

Well, to begin with, a quick look at an ANN next to a real system of brain neurons shows that something is a little off.

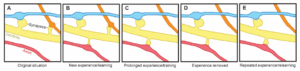

The ANNs we use within computers are orderly and sequential, while the webs of brain neurons are chaotic. This stark contrast exists not only structurally, but also behaviourally. That is to say, there are behaviours within the brain that are not captured by ANNs. Take, for example, the growth of dendritic spines in response to new experiences.

And there is another reason the lack of biologically inspired AI is disappointing: AI research is in a rut.

The Holy Grail

Across most of AI research, the “Grand Slam” would be, without a doubt, Artificial General Intelligence, usually abbreviated as AGI. There is no one single definition for the exact threshold at which AI becomes AGI (and, to be fair, a perfect definition is likely impossible). The idea is still easy to summarize: an AI system which can interpret the world and think critically and emotionally in a similar fashion, and to a similar cognitive degree, as humans.

There have been fantastic gains across all sub-domains of AI in the past decade, but as of late our quest for AGI has been hindered by three primary ‘sticking points’.

1) Deeper does not equal smarter

When the “Deep Learning Revolution” began in 2012 with AlexNet, it was immediately clear that deep ANNs with 10+ layers were the future. However, depth only helps to a point. The benefit of each additional layer shrinks, and performance eventually flattens out. Even past 1000 layers, although performance is good, we don’t arrive at anything close to human-level thought ([He et al. 2016]).

In 2018, AI heavyweight Yann LeCun even went as far as saying “Deep Learning is dead”.

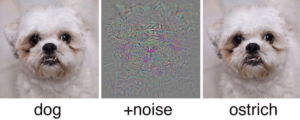

2) Contemporary AI is fragile

AI is easy to break. David Cox, director of the MIT-IBM Watson AI Lab, gives an example of this in a [2020 MIT talk]. Specifically, he talks of Adversarial Attacks, in which a network can be tricked into thinking it is seeing something which it, in fact, is not. Take this example of a friendly dog, which an ANN correctly identifies as a dog. However, an Adversarial Algorithm can adjust the pixels in such a way that the network believes it is instead looking at another animal – here, an ostrich (Szegedy et al. 2014).

The difference is strikingly subtle. Certainly any human would still agree the 3rd picture is of a dog.
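To make the mechanics concrete, here is a minimal sketch of a sign-gradient perturbation on a toy linear "dog detector". This is the fast gradient sign idea rather than the optimisation procedure used by Szegedy et al., and the classifier, its random weights, the random "image", and the `epsilon` budget are all made-up assumptions for illustration, not a real vision model.

```python
import numpy as np


def predict_dog_probability(weights, bias, image):
    """Logistic 'dog vs. not dog' score for a flattened image."""
    return 1.0 / (1.0 + np.exp(-(weights @ image + bias)))


def sign_gradient_attack(weights, image, epsilon):
    """Shift every pixel by at most epsilon in the direction that lowers the dog score."""
    # For a linear logit, the gradient with respect to the image is simply `weights`.
    return np.clip(image - epsilon * np.sign(weights), 0.0, 1.0)


rng = np.random.default_rng(0)
n_pixels = 10_000

# Hypothetical classifier and image (pixel values on a 0..1 scale).
weights = rng.normal(0.0, 0.05, n_pixels)
image = rng.uniform(0.2, 0.8, n_pixels)
bias = 3.0 - weights @ image  # calibrated so the clean image scores about 95% "dog"

adversarial = sign_gradient_attack(weights, image, epsilon=0.02)

clean_p = predict_dog_probability(weights, bias, image)
adv_p = predict_dog_probability(weights, bias, adversarial)
max_change = np.max(np.abs(adversarial - image))

print(f"clean image:       P(dog) = {clean_p:.3f}")
print(f"adversarial image: P(dog) = {adv_p:.3f}")
print(f"largest change to any single pixel: {max_change:.3f}")
```

No pixel moves by more than 2% of its range, yet because thousands of tiny nudges all push the score the same way, the toy classifier's verdict flips.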

A similar technique can be used to trick ANNs into being unable to identify people in video.

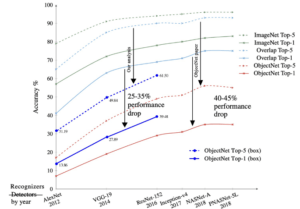

3) AI Lacks Robustness

In the field of computer vision, it’s fairly common to hear of AI performing at “super-human” levels (e.g. Langlotz et al. 2019). But, to take another point from David Cox, this is somewhat misleading. The primary reason is that, in competitive image datasets (such as ImageNet), images tend to be framed quite generously: properly centred, correctly oriented, well lit, in a familiar setting, and overall very ‘typical’. When we instead use datasets containing more varied examples (a knife lying on the side of a sink, a chair lying on its side, a T-shirt crumpled on a bed, etc.), the drop in performance is drastic. Here we see a 25-40% performance drop when moving from ImageNet to a more comprehensive dataset (ObjectNet).

Source: Borji et al. 2020

The Brain Gain

In December 2019, an AI research body in Montreal (imaginatively named Montreal.AI) hosted an AI debate between Yoshua Bengio and Gary Marcus. What made this talk stand out is that, while Yoshua is an AI specialist, Gary’s domain is cognitive science.

In December 2020, on account of the first debate being well received, the company hosted a second debate. Instead of a two-way debate, the panel consisted of 17 speakers. About one third were AI specialists, while the other two thirds consisted of neuroscientists and psychologists.

What transpired in both of these multi-disciplinary debates was quite astonishing. The ideas and expectations flowed, often fuelled by the AI side, but regularly put in check by the neuroscience side. Additionally, insights into potentially fruitful new directions more often than not came from the psychologists, based on how humans learn and develop. In sum, the interdisciplinary advantage shone through brightly. I would like to highlight some of the ideas that stood out the most.

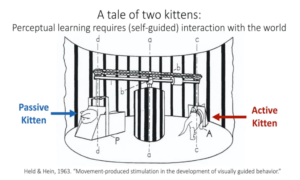

The Cat Carousel

One of the ideas brought forward by Fei-Fei Li of Stanford University is the historical ‘Cat Carousel’ experiment (Held & Hein, 1963). One kitten is free to explore, while a second kitten is tethered to the first.

They both see their surroundings, but only one of them actually does the moving. The experiment shows that only the active kitten develops normal depth perception, while the passive kitten leaves the experiment uncoordinated and clumsy. The lesson is that learning must be accompanied by movement. Barbara Tversky, professor at Columbia University, added another example: humans often move their hands when watching lectures, drawing shapes in physical space to better memorize concepts.

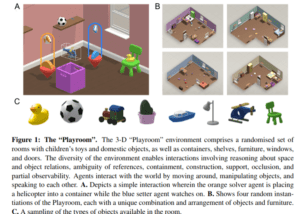

AI researchers have made note of this. At DeepMind, researchers have created a simulated “Playroom” in which an AI can interact with its surroundings.

Reproduced from DeepMind 2020.

The AI system is fed both visual input regarding its surroundings and commands in natural language. In turn, it translates these into decisions regarding how to move and how to respond. One may ask which architecture allows for this. The answer is rooted in Neurosymbolic AI.

Neurosymbolic AI

The concept of Neurosymbolic AI originated during the very first steps into AI (see, for example, McCarthy et al. 1955 and Minsky 1991). The researchers hoped to create a system capable of storing and retrieving information related to objects and concepts. For example, if asked “what do you know about apples?” the system could reply that they are green, red, or yellow, that they can be sweet, sour, or tart, and that they can be used to make pies (and so on and so forth).
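A toy version of such a store-and-retrieve system fits in a few lines. This is only an illustrative sketch: the `tell` and `ask` helpers and the relation names are hypothetical, and early symbolic systems were of course far richer than a nested dictionary.

```python
# Concept -> relation -> set of values, e.g. knowledge["apple"]["colour"] = {"red", ...}
knowledge = {}


def tell(concept, relation, *values):
    """Store one or more facts about a concept."""
    knowledge.setdefault(concept, {}).setdefault(relation, set()).update(values)


def ask(concept):
    """Retrieve everything known about a concept, with values sorted for readability."""
    facts = knowledge.get(concept, {})
    return {relation: sorted(values) for relation, values in facts.items()}


tell("apple", "colour", "green", "red", "yellow")
tell("apple", "taste", "sweet", "sour", "tart")
tell("apple", "used_for", "pies")

apple_facts = ask("apple")
for relation, values in apple_facts.items():
    print(f"apple / {relation}: {', '.join(values)}")
```

Everything the system "knows" is explicit and inspectable, which is the appeal of the symbolic side; the difficulty lies in filling such a table from raw pixels or text.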

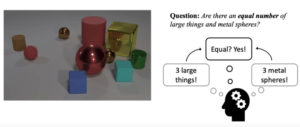

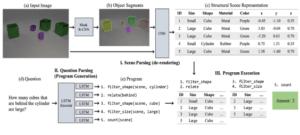

Some of the basic ideas of Neurosymbolic AI are now being revamped to tackle more complex AI challenges – challenges that convolutional neural networks are unable to tackle alone. Take for example this question:

Reproduced from this lecture by David Cox.

As humans, we would likely approach this problem in 3 steps: first counting the number of large objects, then the number of metal spheres, and then quickly checking that the two numbers match. In an attempt to recreate this behaviour, a Neurosymbolic AI system may look something like this:

Reproduced from this lecture by David Cox.

The network contains a computer vision component which segments the image and then classifies the objects within the image into a table. Simultaneously, an NLP routine translates the question into a number of programming steps, which can be applied to the table. However, in order to train this section of the network, the programming steps must be differentiable (so that gradient descent can be applied). Hence, this step within the Neurosymbolic AI system is sometimes called Differentiable Programming.
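The pipeline described above can be sketched in plain Python. Everything here is an assumption made for illustration: the object table stands in for the output of the vision component, the program is hand-written rather than produced by an NLP routine, and the "soft" variant only hints at how the steps could be made smooth enough for gradient descent (a real system would use an automatic differentiation framework).

```python
import math

# Hypothetical output of the vision component: one row per detected object.
object_table = [
    {"size": "large", "material": "metal", "shape": "sphere"},
    {"size": "large", "material": "rubber", "shape": "cube"},
    {"size": "small", "material": "metal", "shape": "sphere"},
    {"size": "small", "material": "rubber", "shape": "cylinder"},
]


def filter_by(table, **wanted):
    """Keep the rows whose attributes match every requested value."""
    return [row for row in table if all(row[k] == v for k, v in wanted.items())]


def same_number_of_large_objects_and_metal_spheres(table):
    """The three steps from the text: count, count, compare."""
    n_large = len(filter_by(table, size="large"))
    n_metal_spheres = len(filter_by(table, material="metal", shape="sphere"))
    return n_large == n_metal_spheres


# Soft variant: the vision component reports probabilities instead of hard labels.
soft_table = [
    {"large": 0.9, "metal": 0.9, "sphere": 0.9},
    {"large": 0.9, "metal": 0.1, "sphere": 0.1},
    {"large": 0.1, "metal": 0.9, "sphere": 0.9},
    {"large": 0.1, "metal": 0.1, "sphere": 0.1},
]


def soft_count(table, *attributes):
    """Expected number of objects with all attributes (treated as independent)."""
    return sum(math.prod(row[name] for name in attributes) for row in table)


def soft_equal(a, b):
    """Smooth stand-in for `a == b`: 1.0 when equal, decaying towards 0 otherwise."""
    return math.exp(-((a - b) ** 2))


hard_answer = same_number_of_large_objects_and_metal_spheres(object_table)
soft_large = soft_count(soft_table, "large")
soft_metal_spheres = soft_count(soft_table, "metal", "sphere")
soft_answer = soft_equal(soft_large, soft_metal_spheres)

print("hard answer:", hard_answer)
print(f"soft counts: {soft_large:.2f} large, {soft_metal_spheres:.2f} metal spheres")
print(f"soft answer: {soft_answer:.2f}")
```

The hard version gives a crisp yes or no but offers no gradient to learn from. The soft version replaces filtering and comparison with sums, products, and a smooth similarity, so a small change in any probability produces a small change in the answer.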

Thinking Fast and Slow

When given an unfamiliar problem like the one above, our mind works slowly and deliberately. In contrast, when emptying the dishwasher or tying our shoelaces, we can operate quickly without much thought. This two-tier behaviour is what Daniel Kahneman describes as System 1 (fast, instinctive behaviour) and System 2 (slow, logical processing). Sometimes, this fast/slow dichotomy can be quite puzzling. Take this for example:

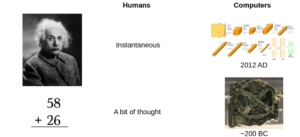

If asked to recognize a face, humans can instantaneously answer. It feels as though this happens without even thinking. But given a math problem we have to slowly think, working through the calculation step-by-step.

But, curiously, computers have the opposite skill-set. Facial recognition is a relatively recent skill, which has reached maturity only in the past two decades. It generally requires fairly heavy-duty architecture. On the other hand, basic arithmetic calculators have been around since antiquity. Take, for example, the Antikythera Mechanism, a very primitive computer dating from around 200 BC.

So, why is this the case?

Evolutionary Priors

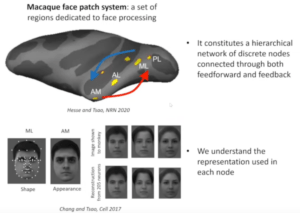

Perhaps unsurprisingly, arithmetic was historically not as useful to us as facial recognition. Being able to tell friend from foe was much more important to us than adding numbers. Some recent research has shed light on exactly how this evolutionarily hard-coded facial recognition operates, at least in monkeys.

Data Reproduced from Doris Tsao’s Montreal.AI talk, December 2020.

What exactly have we found? The system responsible for face recognition is fairly compact, consisting of roughly 200 neurons. The neurons project down to a roughly 50-dimensional feature space, in which each dimension corresponds to a combination of actual facial features (e.g. hairline, eye position, lips, etc.), as opposed to just one. Fascinatingly, researchers can work backwards from the recorded neuron activations and reconstruct the face the monkey is looking at without actually seeing it themselves!
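To get a feel for how such a reconstruction could work, here is a small simulation. It is a sketch under strong assumptions that are mine, not the researchers': each simulated neuron responds linearly to a 50-dimensional face description plus a little noise, the tuning weights are random, and the decoder is an ordinary least-squares fit.

```python
import numpy as np

rng = np.random.default_rng(0)
N_NEURONS, N_FEATURES = 200, 50

# Assumption: every neuron is tuned to a random mixture of the 50 face features.
tuning = rng.normal(size=(N_NEURONS, N_FEATURES))


def record(faces):
    """Simulated firing rates for a batch of faces (one row per face)."""
    noise = 0.1 * rng.normal(size=(len(faces), N_NEURONS))
    return faces @ tuning.T + noise


# "Show" the simulated monkey 1000 known faces and record the responses.
train_faces = rng.normal(size=(1000, N_FEATURES))
train_rates = record(train_faces)

# Fit a linear decoder that maps firing rates back to face features.
decoder, *_ = np.linalg.lstsq(train_rates, train_faces, rcond=None)

# Reconstruct a face the decoder has never seen, using only the neural activity.
new_face = rng.normal(size=(1, N_FEATURES))
reconstruction = record(new_face) @ decoder

correlation = np.corrcoef(new_face.ravel(), reconstruction.ravel())[0, 1]
print(f"correlation between true and reconstructed features: {correlation:.3f}")
```

In this idealised setting the reconstruction is almost perfect, because 200 noisy linear measurements are more than enough to pin down 50 unknowns. Real recordings are far messier, which is what makes the experimental result so striking.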

Understanding this system is a huge step forward in understanding what are called ‘priors’, that is, systems which have been ‘pre-loaded’ into our brains by evolution. The question is: where should we draw the line? For example, should an AI system come with an entire Neurosymbolic AI architecture as a prior, or should this be developed through learning over time, growing out of some simpler and more fundamental system?

There is still no consensus, and a lot more work, in neuroscience, psychology, and AI, will be needed before an answer is clear.

Let’s Talk Numbers

Can we gauge how close we are to AGI by looking at the numbers? That is, how close is digital architecture to that of our brain? What if we consider the number of operations/second?

This would suggest we are about a factor of 100 away from AGI. What if we instead looked at the number of connections?

- Brain: ~1.5×10¹⁴ Synapses (roughly equivalent to parameters) (Source)

- Microsoft ZeRO & DeepSpeed: 10¹¹ Parameters (Source)
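As a quick sanity check on the gap between these two figures, the ratio can be computed directly. The variable names are mine; the numbers are the ones quoted in the list.

```python
import math

brain_synapses = 1.5e14    # figure quoted above for the human brain
model_parameters = 1e11    # figure quoted above for ZeRO & DeepSpeed

ratio = brain_synapses / model_parameters
orders_of_magnitude = math.log10(ratio)

print(f"synapses per model parameter: {ratio:,.0f}")
print(f"gap in orders of magnitude:   {orders_of_magnitude:.1f}")
```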

So here it looks closer to a factor of 1000. But these numbers obscure the fact that the correlation between brain metrics and intelligence is currently a complete black box. To illustrate this, take for example the fact that elephants have roughly three times as many neurons as humans, and likely more synapses too.

There were those who believed the intelligence of humans came from the number of neurons in their cerebral cortex – an area associated with consciousness. However, orcas have more than twice the number of cerebral cortex neurons as humans.

So, all this to say, the numbers do very little to shed light on how far we are from AGI. We currently have no idea what is so special about the human brain that it confers the intelligence required to become the dominant species, ahead of elephants and orcas.

The Four Missing Pillars

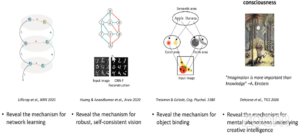

What would represent a good first step towards AGI? Well, Doris Tsao offers four interesting neuroscience avenues, each of which could hold some keys towards unlocking AGI.

Reproduced from Doris Tsao’s Montreal.AI talk, December 2020.

The four directions are:

- A better understanding, from a neuroscience point of view, of exactly how the human mind learns.

- Uncovering the apparent robustness of human vision. That is to say, our lack of susceptibility to Adversarial Attacks, ability to overcome noise, and skill at recognizing objects in a wide range of settings and orientations.

- Understanding the Binding Problem: how the mind separates different objects in our field of vision, and additionally combines multiple inputs into a single unified ‘experience’.

- Understanding what exactly is going on when we ‘see’ our thoughts (imagination, dreams, consciousness).

The Pessimists

Calling Christof Koch, president and chief scientist at the Allen Institute for Brain Science, a pessimist is perhaps unfair. Maybe ‘realist’ would be better.

Regardless of which title you prefer, Christof Koch argues that looking to neuroscience for help with AI is a hopeless pursuit. He points out some humbling facts about the human brain.

- Neuroscientists have identified over 1000 different types of neurons. In ANNs we usually treat all neurons as identical in their functioning.

- Some neurons have “dendritic trees” which have on the order of 10,000 inputs and outputs, more than what we find in most ANNs.

- Current neuroscience is unable to simulate functional behaviour when studying systems on the order of 100 neurons (e.g. C. elegans, see figure below).

- In trying to do so with the human brain, we would be working with a system on the order of 10¹¹ neurons.

Although we now have a complete wiring diagram of the C. elegans nematode neurons, we have no idea how the system translates to behaviour. (Source: Varshney et al. 2011)

And there’s another angle here: there is no reason to believe the human brain is in any way ‘optimal’. Our brain has evolved under a number of different constraints, and the set of constraints for AI systems will be completely different.

- Metabolic Constraints: The human brain must satisfy a basic need for nutrients.

- Material Constraints: The human body is mostly composed of carbon, hydrogen, oxygen, and nitrogen.

- Evolutionary Constraints: The human brain was shaped to optimize performance in the context of very specific conditions, many of which existed only millions of years ago.

To get a feel for how many evolutionary artifacts still linger in our minds, take a quick look at the list of cognitive biases we have identified. There is no reason to think it necessary to replicate these biases in AI systems when pushing towards AGI.

Bringing it all Together

Even though Christof Koch remains a pessimist, he does concede one point.

In our quest for AGI, the relationship between Neuroscience research and AI research need not be one of only two extremes, “married together” or “never talking”. In sum, AI researchers should avoid ignoring the insights coming from Neuroscience and Psychology, but should not aim to replicate those insights exactly within digital hardware. It is not necessary to refer to the brain when designing AI, and indeed we should view AGI not as a chance to replicate our human mind, but as a chance to reinvent it. That being said, when we find ourselves stumped, we shouldn’t forget that we already have a working intelligence, built on physical hardware. The interdisciplinary approach can be both beautiful and fruitful. So why not peek into the mind from time to time?

Do you find this stuff cool? Why not sign up for the 3rd Montreal.AI debate, happening December 2021?