“The temptation to form premature theories upon insufficient data is the bane of our profession.”

DATA ENGINEERING

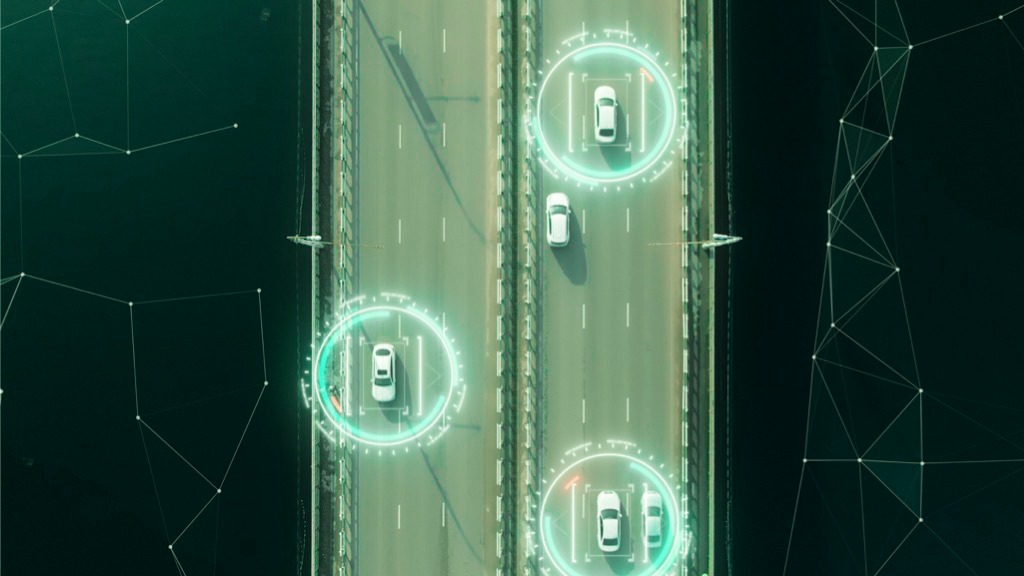

Due to the ever-increasing growth of data collection, new ways to store, queue, and gain insights from digital information have been created in the past decade. The prohibitive cost of pushing individual supercomputers to run faster has led to a paradigm shift towards horizontal scaling (many computers working in parallel). Big Data Engineers create and manage these new tools, and are responsible for developing, maintaining, evaluating, and testing Big Data Solutions.

Why work with synvert Data Insights?

Big Data is constantly expanding with new technologies, platforms, and methodologies. At synvert Data Insights, our Data Engineers are at the forefront of the data domain, helping collect, curate, and aggregate data from various sources. We’re experienced software engineers who specialize in Big Data and empower organizations to make data-driven decisions and leverage their data for business value.

WHAT WE DO

- Collaborate with stakeholders to design and implement data-related assets that align with business requirements

- Develop, construct, maintain, and test architectures and complex systems

- Handle data acquisition, storage management, and data modeling

- Create and optimize core analytics infrastructure that enables other data functions to deliver insights

- Take care of data governance, ensuring security and privacy

- Build automated data pipelines and monitoring services to improve data reliability, efficiency, quality, and security

- Create CI/CD pipelines for various cloud stacks

- Use best practices and modern tools for data processing and scalability

- Perform data processing, wrangling, cleansing, and transformation to ensure cost and speed efficiency

- Prepare data for predictive and prescriptive modeling, and deploy machine learning models using cloud services

Identify the Problem with Big Data

“Big data is a field that treats ways to analyse, systematically extract information from, or otherwise deal with data sets that are too large or complex to be dealt with by traditional data-processing application software.” The keywords here are ‘too large or complex’ and ‘traditional data-processing’. The total amount of data created, captured, copied, and consumed in the world increased rapidly in 2020 and is forecast to double by 2023. Given the deluge of information we produce, ‘traditional data-processing’ is utterly inefficient at analysing, filtering, and understanding all this data, and at creating value from it.

We support your data projects – from idea to production.

Value is only created by turning initiatives into concrete actions. To that end, we bring a strong technical background as well as extensive corporate experience. We are ready to help you from start to finish.