ArgoCD, a powerful GitOps tool, simplifies the continuous delivery and synchronization of applications on Kubernetes clusters. In this blog post, we focus on deploying ArgoCD with Terraform on a private Azure Kubernetes Service (AKS) cluster. By utilizing Terraform, we provision the infrastructure and AKS cluster, and then deploy ArgoCD. ArgoCD is connected to a repository that holds the cluster’s configuration and application definitions, enabling automated deployments based on changes to the repository. This approach ensures that the cluster’s desired state is consistently maintained.

Why ArgoCD?

ArgoCD provides a declarative approach to managing Kubernetes deployments. It allows you to define your desired application state in Git, and ArgoCD takes care of ensuring that your applications are deployed and maintained according to that desired state.

We will explore how ArgoCD can be used to manage network policies, deploy additional applications such as Cert-Manager and Ingress Nginx, handle secrets, and perform other AKS-specific configurations. This allows for a centralized and automated approach to managing your AKS cluster, reducing manual intervention and ensuring consistency.

By leveraging the capabilities of ArgoCD, we can automate the deployment of applications, enforce version control, and easily roll back changes if needed. This blog post will guide you through the process of setting up ArgoCD on a private AKS cluster, providing you with a powerful GitOps-based deployment solution.

Preparing the Infrastructure

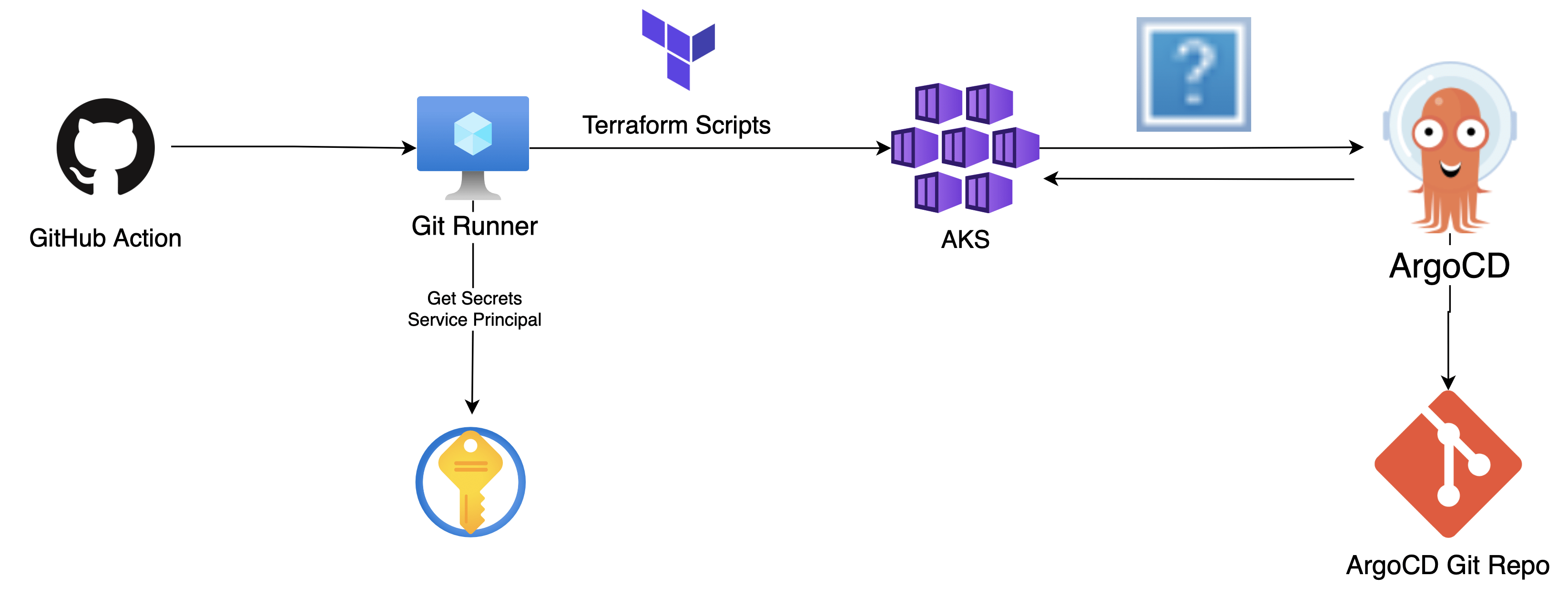

Before we can deploy ArgoCD on our private AKS cluster, we need to set up the necessary infrastructure. This involves creating two resource groups: “Resource Group Deployment” and “Resource Group AKS”.

Resource Group Deployment

The purpose of this resource group is to house the resources required for deploying the private AKS cluster. It includes a Virtual Machine (VM) running a self-hosted GitHub Actions runner, a Key Vault to securely store secrets, and a Storage Account where we will save the Terraform state for the AKS infrastructure.

Resource Group AKS

The second resource group, “Resource Group AKS,” will contain the AKS cluster itself, along with additional resources such as a Key Vault and Storage Account that can be used by the AKS cluster. It will also include a Container Registry to store private images and a private DNS zone for the AKS cluster.

Peered Virtual Networks

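The API server of a private AKS cluster is reachable only from its own virtual network or from networks connected to it. To give the GitHub runner a direct connection to the cluster, the virtual networks of both resource groups will be peered. This allows secure, private communication between the AKS cluster and the resources in the “Resource Group Deployment” resource group. The private DNS zone of the cluster must also be linked to the deployment virtual network; otherwise, the runner cannot resolve the private API server address.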
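
A minimal sketch of the peering and the DNS link in Terraform, assuming the custom private DNS zone in the “Resource Group AKS” is used. The resource names azurerm_virtual_network.deployment, azurerm_virtual_network.aks and azurerm_private_dns_zone.aks, as well as the peering and link names, are hypothetical placeholders for objects declared elsewhere in your configuration.

```hcl
# Hypothetical names: azurerm_virtual_network.deployment, azurerm_virtual_network.aks
# and azurerm_private_dns_zone.aks are assumed to be declared elsewhere.

# Peering from the deployment network (GitHub runner) to the AKS network
resource "azurerm_virtual_network_peering" "deployment_to_aks" {
  name                         = "peer-deployment-to-aks"
  resource_group_name          = azurerm_virtual_network.deployment.resource_group_name
  virtual_network_name         = azurerm_virtual_network.deployment.name
  remote_virtual_network_id    = azurerm_virtual_network.aks.id
  allow_virtual_network_access = true
}

# Each peering resource covers one direction, so the reverse peering is needed as well
resource "azurerm_virtual_network_peering" "aks_to_deployment" {
  name                         = "peer-aks-to-deployment"
  resource_group_name          = azurerm_virtual_network.aks.resource_group_name
  virtual_network_name         = azurerm_virtual_network.aks.name
  remote_virtual_network_id    = azurerm_virtual_network.deployment.id
  allow_virtual_network_access = true
}

# Link the cluster's private DNS zone to the deployment network so the runner
# can resolve the private API server FQDN
resource "azurerm_private_dns_zone_virtual_network_link" "aks_dns_deployment" {
  name                  = "link-aks-dns-deployment"
  resource_group_name   = azurerm_private_dns_zone.aks.resource_group_name
  private_dns_zone_name = azurerm_private_dns_zone.aks.name
  virtual_network_id    = azurerm_virtual_network.deployment.id
  registration_enabled  = false
}
```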

Service Principal Preparation

Before deploying the AKS cluster, we need to prepare a service principal. This service principal will be assigned the Contributor role either at the subscription level or on the “Resource Group AKS” to enable AKS resource deployment. Additionally, the service principal must have the Storage Blob Data Contributor role on the Storage Account in the “Resource Group Deployment” so that it can save the Terraform state.
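
If these role assignments are managed in the local Terraform configuration, they could look roughly like the sketch below. It scopes Contributor to the “Resource Group AKS” rather than the subscription. The variable deploy_sp_object_id and the resources azurerm_resource_group.aks and azurerm_storage_account.tfstate are hypothetical names, assumed to exist in that configuration.

```hcl
# Hypothetical input: object ID of the deployment service principal
variable "deploy_sp_object_id" {
  type        = string
  description = "Object ID of the service principal used by the GitHub workflow"
}

# Contributor on the AKS resource group lets the service principal deploy the cluster
resource "azurerm_role_assignment" "sp_contributor_aks" {
  scope                = azurerm_resource_group.aks.id
  role_definition_name = "Contributor"
  principal_id         = var.deploy_sp_object_id
}

# Data-plane access to the state Storage Account for the Terraform backend
resource "azurerm_role_assignment" "sp_tfstate_blob" {
  scope                = azurerm_storage_account.tfstate.id
  role_definition_name = "Storage Blob Data Contributor"
  principal_id         = var.deploy_sp_object_id
}
```

Note that Contributor does not include permission to create role assignments. If the AKS configuration itself creates any (for example, granting the cluster identity access to a private DNS zone or the Container Registry), the service principal will likely also need a role such as User Access Administrator on the relevant scope.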

Deployment Steps

The first step in the deployment process is to apply a Terraform configuration from your local computer. It deploys the “Resource Group Deployment” and registers the Virtual Machine as a self-hosted GitHub Actions runner. Once this is deployed, we save the service principal ID and secret in the Key Vault for use in the next step.

Next, the AKS resources will be deployed using a GitHub Workflow. The Virtual Machine (GitHub runner) will read the service principal secrets from the Key Vault and utilize them to authenticate and deploy resources to the “Resource Group AKS” resource group.
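
The Terraform state of the AKS deployment is kept in the Storage Account of the “Resource Group Deployment”. Because the service principal holds Storage Blob Data Contributor on that account, the azurerm backend can authenticate with Azure AD instead of storage account keys. A minimal sketch, where the resource group, storage account, container and key names are hypothetical placeholders:

```hcl
terraform {
  # Remote state in the Storage Account of the "Resource Group Deployment".
  # All names below are hypothetical placeholders.
  backend "azurerm" {
    resource_group_name  = "rg-deployment"
    storage_account_name = "sttfstatedeployment"
    container_name       = "tfstate"
    key                  = "aks.terraform.tfstate"

    # Authenticate to Blob Storage with Azure AD (relies on Storage Blob Data Contributor)
    use_azuread_auth = true
  }
}
```

In the workflow, the service principal credentials read from the Key Vault can be exported as the ARM_CLIENT_ID, ARM_CLIENT_SECRET, ARM_TENANT_ID and ARM_SUBSCRIPTION_ID environment variables, which both the azurerm backend and the azurerm provider pick up automatically.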

We will not go into detail on how to deploy Azure resources with Terraform, as the process is fairly straightforward and there are numerous examples available. However, it is important to highlight some key considerations when deploying the AKS cluster using Terraform.

When deploying AKS, make sure to enable the configuration options that the rest of this setup relies on: workload identity, the OIDC issuer, the Blob CSI driver, and RBAC (Role-Based Access Control) with Azure AD integration, including the admin group ID.

Detailed information on deploying AKS with Terraform can be found in the Microsoft documentation.

The example below highlights the configuration options that should be enabled:

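```hcl
# Create an Azure AKS cluster
resource "azurerm_kubernetes_cluster" "aks_cluster" {
  # ...
  # ...

  # Enable workload identity (requires the OIDC issuer below)
  workload_identity_enabled = true

  # Expose the API server only through a private endpoint, without a public FQDN
  private_cluster_enabled             = true
  private_cluster_public_fqdn_enabled = false

  # Enable the OIDC issuer
  oidc_issuer_enabled = true

  # Enable the Blob CSI driver (part of storage_profile in azurerm 3.x and later;
  # the old addon_profile block no longer exists)
  storage_profile {
    blob_driver_enabled = true
  }

  # Enable Azure AD integration with Azure RBAC and specify the admin group ID.
  # managed = true selects AKS-managed Azure AD in azurerm 3.x; azurerm 4.x removed
  # the argument because managed integration is the only option there.
  azure_active_directory_role_based_access_control {
    managed                = true
    admin_group_object_ids = ["<admin-group-object-id>"]
    azure_rbac_enabled     = true
  }
}
```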
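
The “Resource Group AKS” contains its own private DNS zone for the cluster. When you bring your own zone, AKS expects a user-assigned control plane identity that holds the Private DNS Zone Contributor role on that zone. A minimal sketch, assuming the resource group is declared as azurerm_resource_group.aks; the identity name and the region in the zone name are hypothetical:

```hcl
# Custom zones for private AKS follow the privatelink.<region>.azmk8s.io pattern;
# "westeurope" is a hypothetical region, replace it with the cluster's region
resource "azurerm_private_dns_zone" "aks" {
  name                = "privatelink.westeurope.azmk8s.io"
  resource_group_name = azurerm_resource_group.aks.name
}

# User-assigned identity for the AKS control plane (hypothetical name)
resource "azurerm_user_assigned_identity" "aks" {
  name                = "id-aks-control-plane"
  location            = azurerm_resource_group.aks.location
  resource_group_name = azurerm_resource_group.aks.name
}

# The control plane identity must be able to manage records in the custom zone
resource "azurerm_role_assignment" "aks_private_dns" {
  scope                = azurerm_private_dns_zone.aks.id
  role_definition_name = "Private DNS Zone Contributor"
  principal_id         = azurerm_user_assigned_identity.aks.principal_id
}

# Arguments to merge into azurerm_kubernetes_cluster.aks_cluster
# (shown as comments to avoid declaring the resource twice):
#
#   private_dns_zone_id = azurerm_private_dns_zone.aks.id
#
#   identity {
#     type         = "UserAssigned"
#     identity_ids = [azurerm_user_assigned_identity.aks.id]
#   }
#
#   depends_on = [azurerm_role_assignment.aks_private_dns]
```

The depends_on keeps the role assignment in place until the cluster is destroyed. If the cluster uses a subnet from your own virtual network, the same identity typically also needs Network Contributor on that subnet or virtual network.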

ArgoCD deployment

Once our AKS cluster is deployed with the necessary configuration options enabled, we can proceed with the deployment and configuration of ArgoCD. In this section, we will focus on the deployment process and the structure of the ArgoCD repository.

ArgoCD repository structure

The ArgoCD deployment requires us to have a repository prepared with the necessary configurations.

Here’s an example of the repository:

```
├── app-of-apps
│   ├── Chart.yaml
│   ├── templates
│   │   ├── argocd-project.yaml
│   │   └── argocd-applications.yaml
├── argocd-apps
│   ├── Chart.yaml
│   ├── templates
│   │   ├── argocd.yaml
│   │   ├── argocd-config.yaml
│   │   ├── argo-workflows.yaml
│   │   ├── argo-events.yaml
│   │   ├── external-operators.yaml
│   │   ├── cert-manager.yaml
│   │   ├── ingress-nginx.yaml
│   │   └── ...
├── argocd-helm
│   ├── Chart.yaml
│   ├── templates
│   └── values.yaml
├── argocd-config
│   ├── Chart.yaml
│   ├── templates
│   │   ├── argocd-ingress-rule.yaml
│   │   ├── argocd-rbac-cm.yaml
│   │   └── ...
│   └── values.yaml
└── ...
```

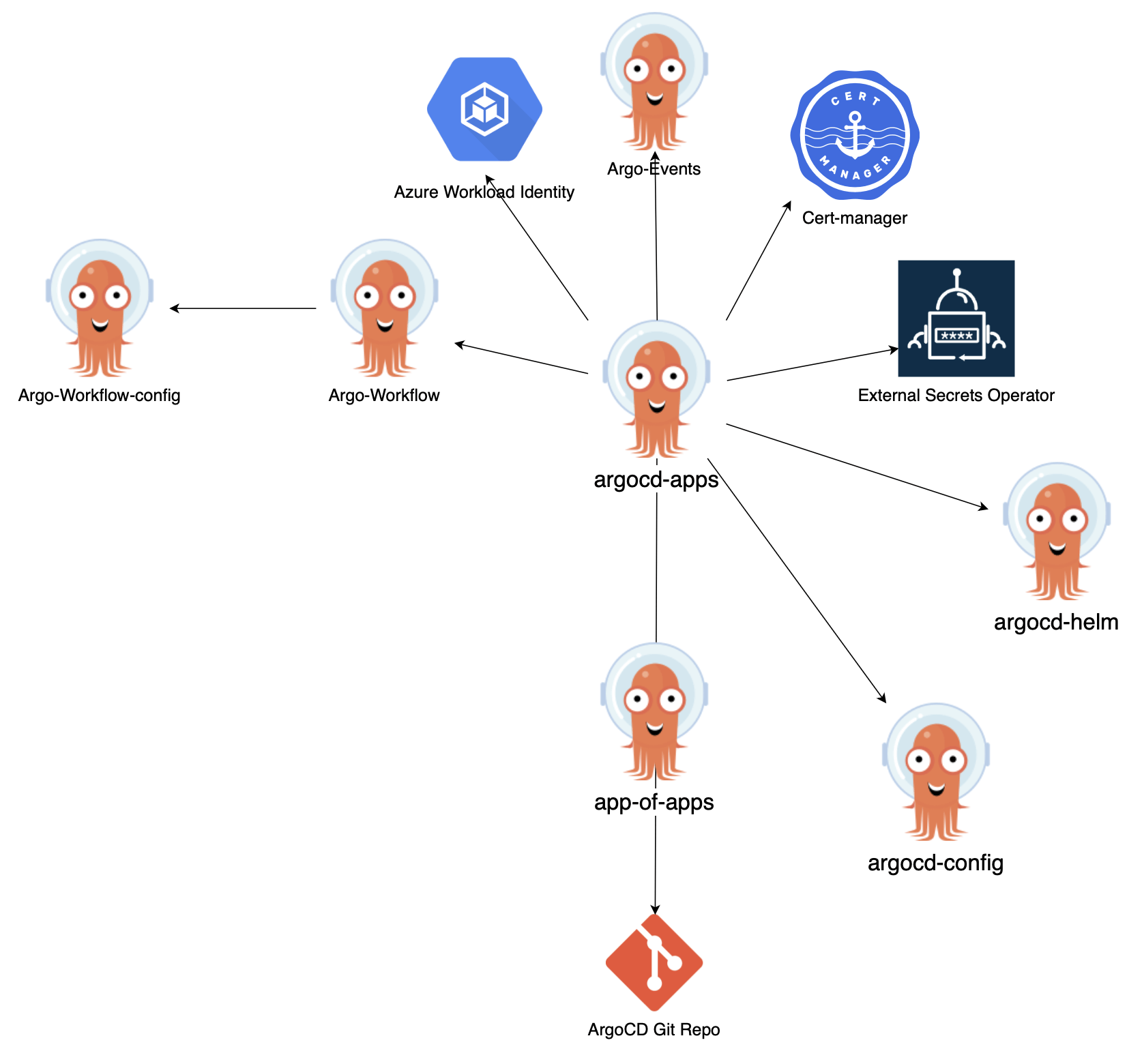

In this structure, we have a folder named app-of-apps which contains the Helm chart for creating the “argocd” project inside ArgoCD. The argocd-project.yaml template defines the project and its configuration. An ArgoCD project serves as a logical grouping of applications and provides a way to organize and manage them within ArgoCD. It allows for fine-grained access control and enables different levels of permissions for different teams or users.

Additionally, the argocd-applications.yaml template points to the argocd-apps Helm chart. The argocd-apps folder contains the Helm chart for the argocd-apps application. An ArgoCD application represents a specific deployment or configuration that needs to be managed by ArgoCD. In this case, the argocd-apps application includes templates for ArgoCD itself and for the applications that run alongside it, such as argocd, argocd-config, argo-workflows, argo-events, external-operators, cert-manager, ingress-nginx, and more.

When ArgoCD synchronizes with the repository, it will automatically deploy these applications and configurations onto the AKS cluster, ensuring that the desired state of the cluster is maintained. This allows for a declarative approach to managing the infrastructure and applications, where changes made to the repository are automatically reflected in the deployed environment.

The second diagram above shows an example of the nested dependencies between applications in ArgoCD.

Using the suggested repository structure as a blueprint, you can adapt and extend it to suit the specific needs of your project.

Deploying the ArgoCD Helm chart with Terraform

The ArgoCD application is the core component of our deployment process. Once the AKS infrastructure is provisioned, the next step is to deploy ArgoCD, which then synchronizes with the repository and deploys all the necessary applications and configurations. To deploy ArgoCD with Terraform, we can use the helm_release resource, as shown in the example below. Make sure that the chart version specified in the Terraform configuration matches, or is compatible with, the version declared in the Chart.yaml file of argocd-helm in the repository. Once ArgoCD syncs argocd-helm, it manages its own installation, so a mismatch would likely make ArgoCD upgrade or downgrade itself right after the initial deployment.

```hcl
resource "helm_release" "argocd" {
  name       = "argocd"
  repository = "https://argoproj.github.io/argo-helm"
  chart      = "argo-cd"

  # The version should match the argo-cd version in Chart.yaml of argocd-helm
  version = "5.33.3"

  namespace        = "argocd"
  create_namespace = true
}
```
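
The helm_release above, and the kubernetes_secret and kubernetes_manifest resources used later, need providers configured against the new cluster. A minimal sketch, assuming the cluster is managed in the same configuration as azurerm_kubernetes_cluster.aks_cluster and that local accounts are not disabled, so that kube_admin_config is populated. Because the cluster is private, the host is a private FQDN that only resolves from the peered runner network. The syntax targets version 2.x of the Helm provider; version 3.x expects kubernetes = { ... } as an attribute instead of a nested block.

```hcl
locals {
  # Admin credentials exported by the AKS resource (empty if local accounts are disabled)
  aks_admin = azurerm_kubernetes_cluster.aks_cluster.kube_admin_config[0]
}

# Helm provider 2.x: cluster connection as a nested kubernetes block
provider "helm" {
  kubernetes {
    host                   = local.aks_admin.host
    client_certificate     = base64decode(local.aks_admin.client_certificate)
    client_key             = base64decode(local.aks_admin.client_key)
    cluster_ca_certificate = base64decode(local.aks_admin.cluster_ca_certificate)
  }
}

# Used by kubernetes_secret and kubernetes_manifest
provider "kubernetes" {
  host                   = local.aks_admin.host
  client_certificate     = base64decode(local.aks_admin.client_certificate)
  client_key             = base64decode(local.aks_admin.client_key)
  cluster_ca_certificate = base64decode(local.aks_admin.cluster_ca_certificate)
}
```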

Connecting repository to ArgoCD

To configure the private repository for ArgoCD synchronization, we can use the Terraform resource kubernetes_secret. This resource allows us to create Kubernetes secrets directly using Terraform.

Here’s an example of how we can use the kubernetes_secret resource to configure the repository for ArgoCD synchronization:

```hcl
resource "kubernetes_secret" "argocd_repo" {
  metadata {
    name      = "repo-argocd"
    namespace = "argocd"

    # This label tells ArgoCD to treat the secret as a repository definition
    labels = {
      "argocd.argoproj.io/secret-type" = "repository"
    }
  }

  # data holds the repository connection details. git_url, git_token and git_user
  # can be stored as GitHub Actions secrets and passed to Terraform as input
  # variables through environment variables named TF_VAR_<variable name>.
  # The kubernetes provider base64-encodes these plain-text values itself.
  data = {
    type     = "git"
    url      = local.git_url
    password = local.git_token
    username = local.git_user
  }

  depends_on = [helm_release.argocd]
}
```

The label “argocd.argoproj.io/secret-type” = “repository” in the metadata section tells ArgoCD to treat this secret as a repository definition, so the repository appears in the list of connected repositories as soon as the secret is created.
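
The secret above, and the Application manifest in the next section, read the repository details from locals. A minimal sketch of how those locals could be wired to input variables, assuming the hypothetical variable names git_url, git_user and git_token. In the GitHub workflow, matching environment variables such as TF_VAR_git_token can then be set from Actions secrets.

```hcl
# Hypothetical input variables, populated from TF_VAR_git_url,
# TF_VAR_git_user and TF_VAR_git_token in the workflow environment
variable "git_url" {
  type        = string
  description = "HTTPS URL of the ArgoCD configuration repository"
}

variable "git_user" {
  type        = string
  description = "User name used to access the repository"
}

variable "git_token" {
  type        = string
  description = "Access token with read permission on the repository"
  sensitive   = true # keeps the token out of plan and apply output
}

locals {
  git_url   = var.git_url
  git_user  = var.git_user
  git_token = var.git_token
}
```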

Deploying the first ArgoCD application

Now that we have successfully connected the repository to ArgoCD, we can proceed with creating our first application that will utilize the app-of-apps Helm chart. While we have briefly mentioned the repository structure earlier, let’s dive into it in more detail since ArgoCD plays a central role in our deployment process.

ArgoCD is already installed on the AKS cluster, but it still needs to be configured and synchronized with the repository. To establish this connection, we need to create an ArgoCD application. This application contains the repository details, the application type (Helm, Kustomize, or plain YAML files), and any values files required for customization. Since we use Helm charts in our deployment, we must provide a values file containing all the customization properties for the applications in our repository.

To simplify application management and configuration, we have organized the argocd application and its configuration into separate Helm charts: argocd-helm and argocd-config, respectively. Once we add the first application, app-of-apps, to ArgoCD, it will automatically synchronize with the repository and start deploying the applications.

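To deploy the first application with Terraform, we can use the kubernetes_manifest resource, which applies arbitrary Kubernetes manifests, including custom resources such as an ArgoCD Application, directly through Terraform. Keep in mind that kubernetes_manifest needs access to the cluster API during terraform plan and requires the Application CRD to exist already. On a brand-new cluster, it may therefore have to be applied in a second run, after the ArgoCD Helm release has installed its CRDs.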

Here’s an example of how we can use the kubernetes_manifest resource to create the first application in ArgoCD:

```hcl
resource "kubernetes_manifest" "application_argocd_apps" {
  manifest = {
    "apiVersion" = "argoproj.io/v1alpha1"
    "kind"       = "Application"
    "metadata" = {
      "annotations" = {
        # Lower sync waves are applied first; -1 runs before the default wave 0.
        # The wave only takes effect when this Application is synced by a parent Application.
        "argocd.argoproj.io/sync-wave" = "-1"
      }
      # Deleting the Application also deletes the resources it deployed
      "finalizers" = [
        "resources-finalizer.argocd.argoproj.io",
      ]
      # Name and namespace of the first application
      "name"      = "app-of-apps"
      "namespace" = "argocd"
    }
    "spec" = {
      "destination" = {
        "namespace" = "argocd"
        "server"    = "https://kubernetes.default.svc"
      }
      "project" = "default"
      # Multiple sources require ArgoCD 2.6 or later
      "sources" = [
        {
          # The first source provides the path to the app-of-apps chart
          # within the repository and the target revision to track.
          "helm" = {
            "releaseName" = "app-of-apps"
            "valueFiles" = [
              "$values/values-argocd-project.yaml",
            ]
          }
          "path"           = "app-of-apps"
          "repoURL"        = local.git_url
          "targetRevision" = "HEAD"
        },
        {
          # This source is referenced as $values and supplies the Helm values file
          "ref"            = "values"
          "repoURL"        = local.git_url
          "targetRevision" = "HEAD"
        },
      ]
      # The syncPolicy section defines the synchronization behavior,
      # including automated pruning and self-healing
      "syncPolicy" = {
        "automated" = {
          "prune"    = true
          "selfHeal" = true
        }
      }
    }
  }

  depends_on = [kubernetes_secret.argocd_repo]
}
```

As ArgoCD synchronizes with the repository, it will detect the changes in the app-of-apps chart and automatically initiate the deployment process for all the applications specified within it. This allows us to define and manage our entire AKS infrastructure and related applications using GitOps principles.

Managing AKS with GitOps and ArgoCD

The core concept behind our approach is to utilize ArgoCD as the central configuration tool for our AKS cluster. Rather than manually applying policies and configuring applications, we can leverage ArgoCD’s automation capabilities to ensure consistency and reliability throughout the deployment process.

With ArgoCD, we can define AKS configurations such as network policies, security policies, roles, secrets, and more as ArgoCD applications. These applications encapsulate all the necessary configuration details, including the repository and customization values. The repository itself can contain plain YAML Kubernetes manifests or be a Helm chart with configurable values, allowing us to reuse the same application definition with different configurations.

By structuring our applications within ArgoCD’s app-of-apps or nested applications, we can maintain a declarative approach to application deployment. This enables us to easily manage updates and rollbacks, ensuring that our AKS cluster remains in the desired state at all times.

Conclusion

Using ArgoCD as the central configuration tool not only simplifies the deployment process but also promotes consistency and reliability. By defining AKS configurations as ArgoCD applications, we can enforce desired policies, easily track changes, and revert to previous configurations if needed.

With ArgoCD’s automation capabilities, managing AKS configurations becomes more efficient, allowing us to focus on delivering applications rather than manual configuration tasks. As a result, we can accelerate the deployment process, reduce human errors, and ensure a high level of consistency and reliability throughout our AKS cluster.